Registering human meshes to 3D point clouds is essential for applications such as augmented reality and human-robot interaction but often yields imprecise results due to noise and background clutter in real-world data. We introduce a hybrid approach that incorporates body part segmentation into the mesh fitting process, enhancing both human pose estimation and segmentation accuracy. Our method first assigns body part labels to individual points, which then guide a two-step SMPL-X fitting: initial pose and orientation estimation using body part centroids, followed by global refinement of the point cloud alignment. By leveraging semantic information from segmentation, our approach ensures robust alignment even with occlusions or missing data. Furthermore, it generalizes across diverse datasets without requiring extensive manual annotations or dataset-specific tuning. Additionally, we demonstrate that the fitted human mesh can refine body part labels, leading to improved segmentation. Evaluations on the challenging real-world datasets InterCap, EgoBody, and BEHAVE show that our approach significantly outperforms prior methods in both pose estimation and segmentation accuracy.
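To make the first fitting step more concrete, the following is a minimal sketch, not the actual implementation. It computes one centroid per predicted body part and scores a candidate body model against those centroids with a squared-distance cost. The function names `part_centroids` and `centroid_cost`, the `min_points` threshold, and the squared-distance cost itself are illustrative assumptions. The model centroids are assumed to come from the corresponding body parts of the SMPL-X mesh.

```python
from collections import defaultdict


def part_centroids(points, labels, min_points=1):
    """Return the mean 3D position of the points assigned to each body part.

    Parts with fewer than `min_points` points (a hypothetical threshold)
    are dropped, since their centroids would be unreliable.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    counts = defaultdict(int)
    for (x, y, z), part in zip(points, labels):
        acc = sums[part]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        counts[part] += 1
    return {
        part: tuple(c / counts[part] for c in sums[part])
        for part in sums
        if counts[part] >= min_points
    }


def centroid_cost(observed, model):
    """Sum of squared centroid distances over parts present in both inputs.

    Parts missing from the observation (e.g. occluded) contribute nothing.
    """
    shared = observed.keys() & model.keys()
    return sum(
        sum((o - m) ** 2 for o, m in zip(observed[part], model[part]))
        for part in shared
    )


# Toy example: three labeled points, and a model with one unobserved part.
points = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 1.0)]
labels = ["head", "head", "torso"]
observed = part_centroids(points, labels)
model = {
    "head": (1.0, 0.0, 1.0),
    "torso": (0.0, 1.0, 1.0),
    "left_hand": (5.0, 5.0, 5.0),  # not observed, so ignored by the cost
}
print(centroid_cost(observed, model))  # prints 1.0
```

Because only parts visible in the point cloud enter the cost, a term of this kind stays well defined when body parts are occluded or missing. This is one plausible reading of how semantic labels can stabilize the initial alignment before the global refinement step.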

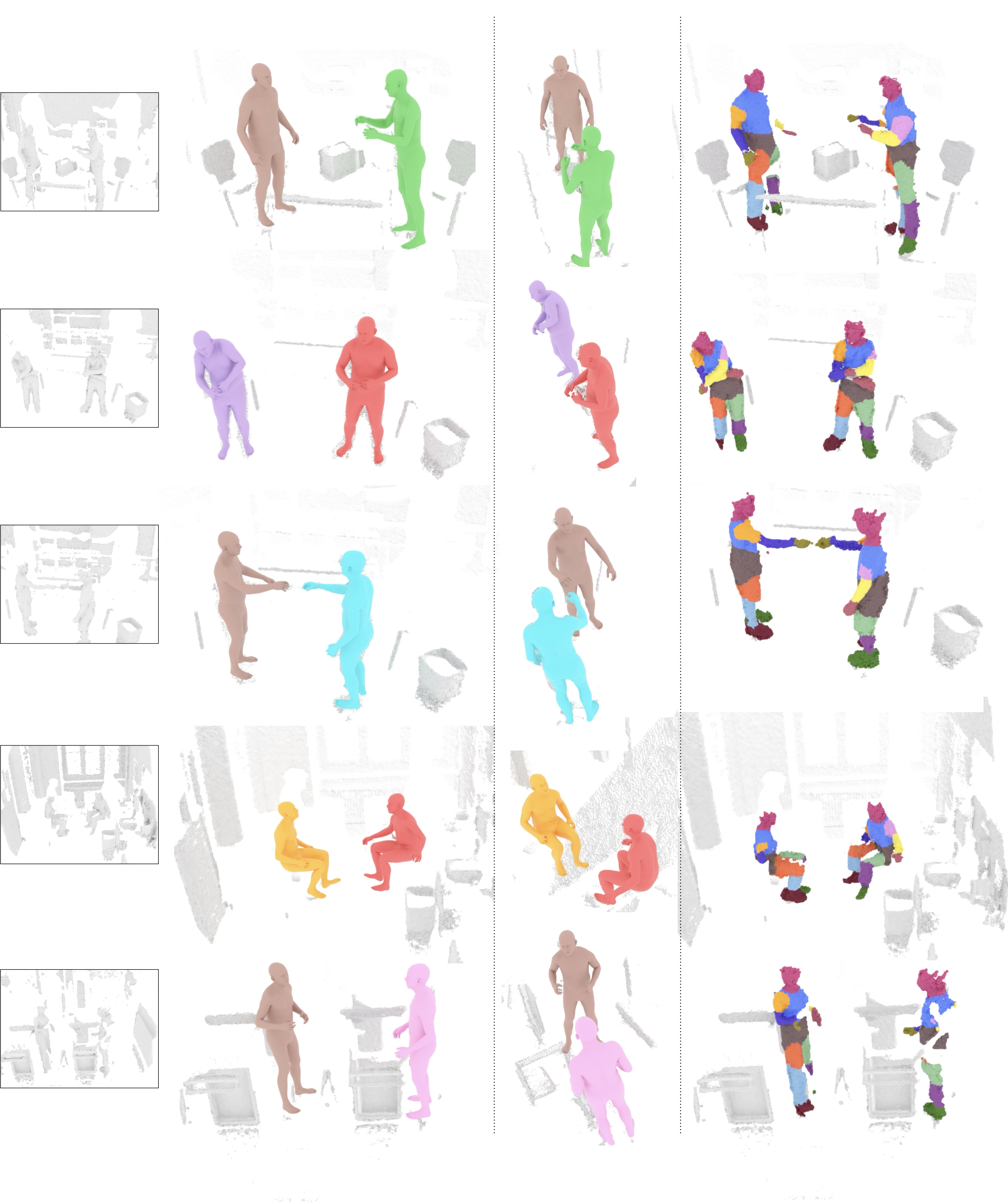

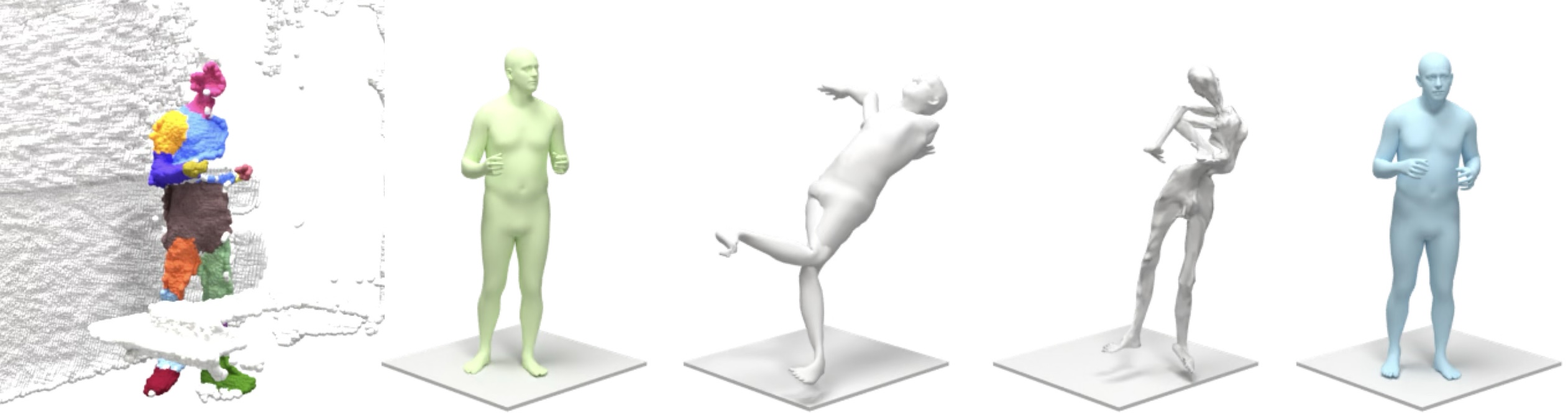

Example outputs of SegFit on the EgoBody dataset. From left to right: the input single-view point cloud of the full scene, including multiple humans, clutter, and background; the human meshes registered by SegFit, shown from the front and from the side; and the body part segmentation refined by SegFit.